How Netflix, Stripe, and GitLab Engineered Observability Culture

Reverse engineering the invisible foundation behind product reliability

Across software organizations, observability tools are the resource teams use to define value and assess product health.

However, observability tools without an observability culture do very little to improve product quality.

What Exactly is Observability Culture?

Observability culture is the collective mindset and shared practice of considering telemetry in every decision.

But cultivating this culture can feel like a bit of a black box.

After studying the observability practices of some of the industry’s top engineering organizations, I found clear patterns that drive product stability at scale, along with a strong case for why infusing proactive telemetry into a team’s DNA is critical for modern product reliability.

What Does Observability Culture Look Like in Practice?

The practices I’ve seen drive observability culture boil down to three key principles:

Metrics that are obvious and meaningful

Monitoring embedded into daily rituals

Telemetry treated as the single source of truth

Metrics That Are Obvious and Meaningful

No team can operate under an avalanche of metrics. To build a culture informed by observability, the metrics themselves must first be easy to understand.

One simple strategy many elite teams have embraced is to anchor observability on a single golden metric.

A golden metric helps surface business-critical issues. The right metric varies from product to product, but selecting one key indicator makes assessing overall product health much simpler.

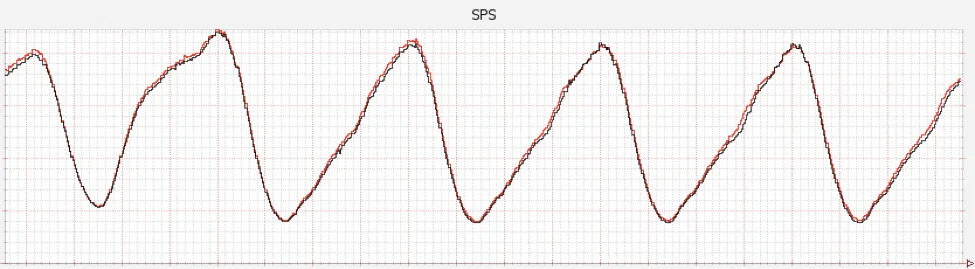

Netflix Engineering, in their tech blog, outlines how they use Streams per Second (SPS) as their primary service health metric. Since streaming is core to their business, an irregularity in SPS is a strong signal that they’re experiencing a significant problem.

By embedding this metric into company-wide language, going so far as to categorize all production incidents as “SPS impacting” or “not SPS impacting”, they frame observability as a shared cultural touchstone across teams.
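To make the “SPS impacting” label concrete, here is a minimal Python sketch of how an incident might be classified against a golden metric. It assumes an expected SPS baseline is available (for example, from a forecast or the same window last week) and uses a hypothetical 5% drop threshold; `Incident`, `classify`, and `IMPACT_THRESHOLD` are illustrative names, not Netflix’s actual tooling or rule.

```python
from dataclasses import dataclass

# Hypothetical threshold: a drop of more than 5% below the expected value
# counts as "SPS impacting". This is an assumption, not Netflix's published rule.
IMPACT_THRESHOLD = 0.05


@dataclass
class Incident:
    name: str
    observed_sps: float  # streams per second measured during the incident
    expected_sps: float  # assumed baseline for the same time window


def classify(incident: Incident, threshold: float = IMPACT_THRESHOLD) -> str:
    """Label an incident by whether it moved the golden metric."""
    if incident.expected_sps <= 0:
        raise ValueError("expected_sps must be positive")
    # Relative drop below the baseline (negative if SPS went up).
    drop = (incident.expected_sps - incident.observed_sps) / incident.expected_sps
    return "SPS impacting" if drop > threshold else "not SPS impacting"


if __name__ == "__main__":
    print(classify(Incident("cdn-latency", observed_sps=90_000, expected_sps=100_000)))
    # prints "SPS impacting"
```

A single, explicit threshold keeps the label unambiguous: anyone in the organization can see why an incident was or wasn’t tagged as SPS impacting.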

This clarity is critical. Just as a doctor relies on pulse and blood pressure as primary indicators of a patient’s health, teams can trust a golden metric to give a broad sense of their product’s health.

Of course, a doctor can order more tests than pulse and blood pressure when needed. Similarly, more detailed secondary metrics support deeper investigation.

Which secondary metrics to track is likely too product-specific to generalize, but ideally the reasoning behind tracking each one should be understandable to non-technical team members. This opens up access to our source of truth (more on this later).

Unfortunately, even well-intentioned teams can sabotage their observability culture through alert fatigue. When every service anomaly triggers a page, engineers quickly learn to ignore alerts or delay responding to them, eroding trust in the team’s monitoring systems.

The golden metric approach directly combats this by establishing a clear hierarchy: alerts tied to your core business metric demand immediate attention, while secondary metrics trigger notifications that are reviewed during regular business hours. This prevents the all-too-common scenario where engineers become numb to constant noise and end up missing the signals that actually matter for user experience and business impact.

Simplified metrics and dashboards are easier to reference, in conversation and elsewhere, which builds a foundation for observability-minded decision making across the organization.
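A rough Python sketch of that hierarchy might look like the following. The metric name `streams_per_second`, the `Route` values, and the `route` function are hypothetical; a real setup would express the same idea in its alerting tool’s own routing configuration.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    PAGE = "page"      # demands immediate attention
    TICKET = "ticket"  # reviewed during regular business hours
    IGNORE = "ignore"  # no threshold breached


# Hypothetical golden metric: the only signal allowed to page someone.
GOLDEN_METRIC = "streams_per_second"


@dataclass
class Anomaly:
    metric: str
    breached: bool  # whether the metric crossed its alert threshold


def route(anomaly: Anomaly, golden_metric: str = GOLDEN_METRIC) -> Route:
    """Page only for the golden metric; everything else becomes a ticket."""
    if not anomaly.breached:
        return Route.IGNORE
    if anomaly.metric == golden_metric:
        return Route.PAGE
    return Route.TICKET
```

Keeping the paging path limited to one metric is the design choice that protects against alert fatigue: secondary metrics still get attention, just not at 3 a.m.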

Monitoring Embedded in Rituals

Attention to observability can’t be left to chance.

As workloads increase, vibe-based investment in telemetry degrades into convenience-based investment: metrics get checked only when it’s easy. To stay proactive, it’s useful to build observability into defined rituals.

Stand-ups, retros, and incident reviews are all rituals that can be used as an opportunity to survey dashboards, key metrics, and relevant traces.

GitLab showcases the power of this approach through their experience operationalizing Observability-Based Performance Testing. Their aim was to embed observability culture deeply into operations to proactively and systematically identify potential performance problems.

In an initial implementation, they focused primarily on adapting rituals to promote observability culture. In their canary group, they carved 5 minutes out of the daily standup to scan dashboards and cut tickets for any anomalies.

They credited this simple discipline with “maintaining a 99.999% uptime during 10x growth period” and with consistently meeting performance KPIs, a testament to how consistently proactive observability practices enable performance at scale.
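The standup scan itself can be as simple as comparing a handful of current readings against agreed thresholds and drafting a ticket for each breach. This Python sketch is not GitLab’s tooling; the `Check` type, the metric names, and the thresholds are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Check:
    metric: str
    value: float        # current reading from the dashboard
    max_allowed: float  # hypothetical threshold agreed on by the team


def standup_scan(checks: List[Check]) -> List[str]:
    """Return one draft ticket title per metric that exceeds its threshold."""
    tickets = []
    for check in checks:
        if check.value > check.max_allowed:
            tickets.append(
                f"Investigate {check.metric}: {check.value} exceeds {check.max_allowed}"
            )
    return tickets


if __name__ == "__main__":
    readings = [
        Check("p95_latency_ms", 420, 300),
        Check("error_rate_pct", 0.2, 1.0),
    ]
    for title in standup_scan(readings):
        print(title)
```

The point is less the code than the cadence: a short, fixed list of checks reviewed every day turns observability into a habit instead of a reaction.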

Rituals don’t have to be events, either. Adopting Observability as Code (OaC) naturally folds observability changes into existing development workflows, ensuring they are reviewed and documented like any other code contribution.

Some teams go so far as to weave observability directly into how their core product is served. For example, engineering teams like Ritual’s use triggers in their feature flags to tie components of their product to real-time monitors.

Although each team’s implementation will differ, establishing consistent observability routines builds a more proactive culture, shifting teams toward prevention rather than firefighting.
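As a sketch of what OaC can look like, the Python snippet below declares monitors as reviewable data and runs a validation step that a CI job could enforce before merge. The `Monitor` fields, the `validate` rules, and the example URL are hypothetical; real OaC setups typically use a dedicated tool and its own schema.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Monitor:
    name: str
    query: str        # query string in whatever language your backend uses
    threshold: float
    owner: str        # team accountable for responding
    runbook_url: str  # where responders find remediation steps


def validate(monitors: List[Monitor]) -> List[str]:
    """Return a list of problems; an empty list means the change can merge."""
    problems = []
    seen = set()
    for m in monitors:
        if m.name in seen:
            problems.append(f"duplicate monitor name: {m.name}")
        seen.add(m.name)
        if not m.owner:
            problems.append(f"{m.name}: missing owner")
        if not m.runbook_url.startswith("https://"):
            problems.append(f"{m.name}: runbook_url must be an https link")
    return problems


MONITORS = [
    Monitor(
        name="checkout-errors",
        query="rate(errors[5m])",
        threshold=0.01,
        owner="payments",
        runbook_url="https://runbooks.example.com/checkout",
    ),
]

if __name__ == "__main__":
    issues = validate(MONITORS)
    print("ok" if not issues else "\n".join(issues))
```

Because monitors live next to the service code, a reviewer sees a new alert, its owner, and its runbook in the same pull request as the feature that needs it.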
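One common pattern for tying flags to monitors is a kill switch: the flag disables itself when its linked monitor reports a breach. This Python sketch assumes that pattern; `GuardedFlag` and its `monitor` callback are hypothetical and do not describe Ritual’s implementation or any feature-flag vendor’s API.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class GuardedFlag:
    """A feature flag that switches itself off when its linked monitor breaches."""

    name: str
    enabled: bool
    monitor: Callable[[], bool]  # returns True while the monitored signal is healthy
    history: List[str] = field(default_factory=list)

    def is_on(self) -> bool:
        # Consult the monitor on every evaluation; trip the switch on a breach.
        if self.enabled and not self.monitor():
            self.enabled = False
            self.history.append(f"{self.name} disabled: monitor breached")
        return self.enabled
```

In this sketch the flag stays off after the monitor recovers, on the assumption that a person should decide when it is safe to re-enable the feature.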

Telemetry Is the Source of Truth

Without telemetry grounding every conversation about product usage and user behavior, teams often fall back on subjective assumptions. Anecdotal feedback has its place, but the overhead of reconciling competing opinions simply isn’t worth it, and it risks producing decisions that don’t align with actual customer needs.

That’s why telemetry must be treated as a first-class citizen: the definitive source of truth for an organization to rely on.

Stripe emphasizes this point in an AWS blog post detailing its transition from a traditional time-series database to cloud-hosted Prometheus and Grafana for storing observability metrics.

One key decision in the migration: Stripe didn’t just switch metrics from one service to another and expect its engineers to adapt overnight. The team recognized that a rapid, mandatory migration would risk damaging their observability culture and undermining the perceived reliability and importance of these metrics.

Instead, they opted to write metrics to both the old and new services simultaneously, asking engineering teams to manually verify parity. They gradually built confidence using team-wide trainings, internal documentation practices, office hours, and internal champions, ensuring that respect for observability as the trusted source of truth endured across their engineering team.
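The dual-write approach can be sketched in a few lines of Python. `InMemoryBackend` stands in for the old and new metric stores, and `parity_report` flags metrics whose latest values disagree, the kind of list an engineer might then verify by hand. All names here are hypothetical; this is not Stripe’s migration code or an AWS API.

```python
from typing import Dict, List, Optional


class InMemoryBackend:
    """Stand-in for a real metrics store; keeps only the latest value per metric."""

    def __init__(self) -> None:
        self.data: Dict[str, float] = {}

    def write(self, name: str, value: float) -> None:
        self.data[name] = value

    def read(self, name: str) -> Optional[float]:
        return self.data.get(name)


class DualWriter:
    """Write every metric to both the legacy and the new backend during migration."""

    def __init__(self, legacy: InMemoryBackend, new: InMemoryBackend) -> None:
        self.legacy = legacy
        self.new = new

    def write(self, name: str, value: float) -> None:
        self.legacy.write(name, value)
        self.new.write(name, value)

    def parity_report(self, names: List[str], tolerance: float = 1e-9) -> List[str]:
        """List metrics that are missing from either backend or differ between them."""
        mismatches = []
        for name in names:
            old, new = self.legacy.read(name), self.new.read(name)
            if old is None or new is None or abs(old - new) > tolerance:
                mismatches.append(name)
        return mismatches
```

Running both paths in parallel means the old dashboards remain trustworthy until the new ones have earned the same confidence, which is exactly what protects the metrics’ status as a source of truth.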

This principle even extends beyond engineering teams.

As the definitive source of truth for product usage, observability metrics are equally critical to technical support, product, and customer success teams. By championing observability as a shared source of truth, every team gains a powerful tool to advocate effectively on equal footing.

By consistently applying a unified, metrics-driven view as the single source of truth, observability culture empowers an entire organization to discuss product usage in lockstep and maintain cohesive, objective communication across all functions.

So, now what?

Observability culture is not a one-time project, but an evolving mindset.

Across these companies, despite differing implementations, the principles remain consistent: prioritize obvious metrics, embed monitoring into rituals, and treat telemetry as the ultimate source of truth.

Netflix, Stripe, and GitLab earned their reputations as elite engineering organizations partly because they mastered this culture. Their system reliability and product stability at scale show that investing in observability culture is one of the highest-leverage moves any team can make.